Introduction

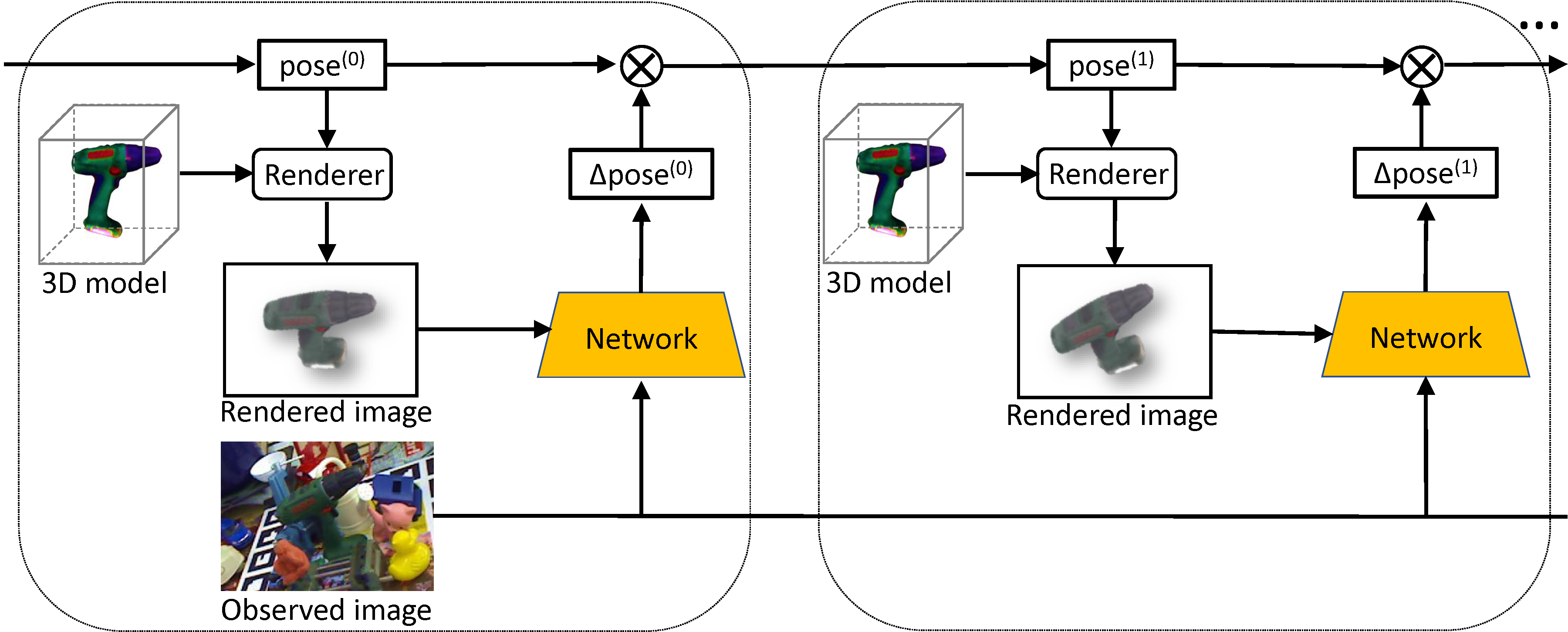

Estimating the 6D pose of objects from images is an important problem in applications such as robot manipulation and virtual reality. While direct regression from images to object poses has limited accuracy, matching rendered images of an object against the input image can produce accurate results. In this work, we propose DeepIM, a novel deep neural network for 6D pose matching. Given an initial pose estimate, our network iteratively refines the pose by matching the rendered image against the observed image. The network is trained to predict a relative pose transformation using an untangled representation of 3D location and 3D orientation, together with an iterative training process. Experiments on two commonly used benchmarks for 6D pose estimation demonstrate that DeepIM achieves large improvements over state-of-the-art methods. We furthermore show that DeepIM is able to match previously unseen objects.
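The iterative refinement idea can be illustrated with a minimal sketch. This is not the released DeepIM code: `predict_delta` is a hypothetical stand-in for the network (which would compare a rendering at the current pose with the observed image), and `make_oracle`, `rot_z`, and the additive translation update are simplifying assumptions chosen only to show how a relative rotation and a relative translation can be applied independently and repeated over several iterations.

```python
import numpy as np

def rot_z(theta):
    """Rotation matrix for an angle theta (radians) about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def apply_delta(R, t, R_delta, t_delta):
    """Apply a relative pose update with rotation and translation decoupled.

    Assumption: the rotation update acts about the object center, so it does
    not move the object; the translation update is a plain additive offset.
    """
    return R_delta @ R, t + t_delta

def refine(R, t, predict_delta, num_iters=4):
    """Repeatedly query the predictor and apply its relative update."""
    for _ in range(num_iters):
        R_delta, t_delta = predict_delta(R, t)
        R, t = apply_delta(R, t, R_delta, t_delta)
    return R, t

def make_oracle(R_tgt, t_tgt, gain=0.5):
    """Hypothetical stand-in for the network, restricted to z-axis rotations.

    It knows the target pose and returns a fraction (gain) of the remaining
    error, mimicking an imperfect predictor that improves with each pass.
    """
    def predict_delta(R, t):
        R_err = R_tgt @ R.T
        angle = np.arctan2(R_err[1, 0], R_err[0, 0])
        return rot_z(gain * angle), gain * (t_tgt - t)
    return predict_delta

# Usage: start from a perturbed pose and refine toward the target.
R_init, t_init = rot_z(0.4), np.array([0.05, -0.02, 0.9])
R_target, t_target = rot_z(0.1), np.array([0.0, 0.0, 1.0])
R_ref, t_ref = refine(R_init, t_init, make_oracle(R_target, t_target))
```

With a gain below 1, each pass removes only part of the remaining error, which is why several iterations are useful in this toy setting.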

Publication

Yi Li, Gu Wang, Xiangyang Ji, Yu Xiang, and Dieter Fox. DeepIM: Deep Iterative Matching for 6D Pose Estimation. In European Conference on Computer Vision (ECCV), 2018 (Oral). arXiv, pdf

Code

Available soon.

Result Video

Acknowledgments

This work was funded in part by a Siemens grant. We would also like to thank NVIDIA for generously providing the DGX station used for this research via the NVIDIA Robotics Lab and the UW NVIDIA AI Lab (NVAIL). This work was also supported by the National Key R&D Program of China 2017YFB1002202, NSFC Projects 61620106005 and 61325003, Beijing Municipal Sci. & Tech. Commission Z181100008918014, and the THU Initiative Scientific Research Program. Ongoing research that extends DeepIM is partially funded by the Honda Research Institute USA.

Contact: liyi10.d at gmail.com

Last update: 07/27/2018