To robustly handle liquids, for example by pouring a specific amount of water into a bowl, a robot must first be able to perceive and reason about liquids in a way that allows for closed-loop control. Liquids present many challenges compared to solid objects. First, a robot cannot interact with liquids directly; instead, it must use a tool or container. Second, containers holding liquid are often opaque, which blocks the robot's view of the liquid and forces it to remember what is in the container rather than re-perceiving it at each timestep. Finally, liquids are frequently transparent, so even distinguishing them from the background is difficult. Taken together, these challenges make perceiving and manipulating liquids highly non-trivial.

Recent advances in deep learning have enabled a leap in performance not only on visual recognition tasks but also in areas ranging from playing Atari games to end-to-end policy training in robotics. In this work, we investigate how deep learning techniques can be used to perceive and reason about liquids. We developed a method for generating large amounts of labeled pouring data for training and testing using a realistic liquid simulation and rendering engine, and we used it to generate a dataset of over 4.5 million labeled images. Using this dataset, we showed that deep learning can be successfully applied to the task of perceiving and reasoning about liquids.

Results:

Liquid Simulation Dataset:

You can download the dataset here.

Results on Real Data:

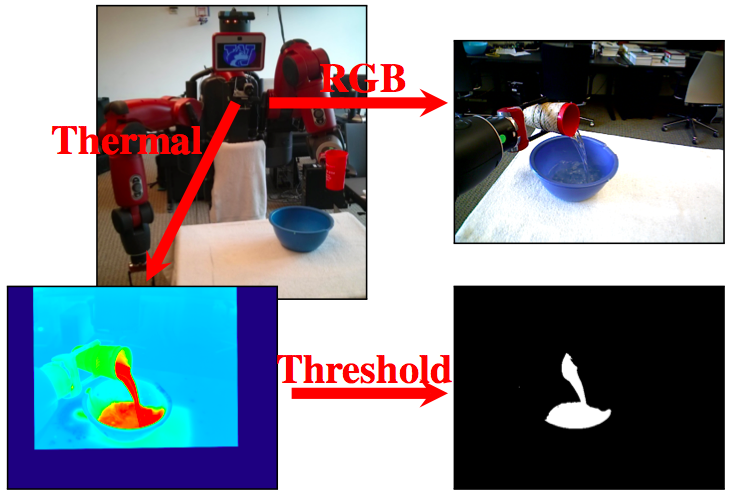

Recently, we've begun collecting a dataset of our Baxter robot performing pouring tasks. We mounted an Asus Xtion Pro RGB-D camera on the robot's chest alongside an ICI 8640P thermographic camera. The RGB-D camera provides color images as input to our neural networks, and the thermographic camera provides ground-truth pixel labels: we used heated water, so the liquid appears warmer than its surroundings in the thermal image. Our setup is shown in the figure.
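To illustrate how heated water can turn a thermal image into pixel labels, here is a minimal sketch that marks a pixel as liquid when its temperature exceeds a threshold. It assumes the thermal image has already been registered to the RGB frame and is stored as a 2D list of temperatures in degrees Celsius; the function names `thermal_to_liquid_mask` and `liquid_fraction` and the 30 °C default threshold are hypothetical choices for illustration, not the labeling pipeline used in this work.

```python
from typing import List

# Hypothetical formats: a registered thermal image (deg C) and a boolean mask.
Image = List[List[float]]
Mask = List[List[bool]]


def thermal_to_liquid_mask(thermal: Image, threshold_c: float = 30.0) -> Mask:
    """Label each pixel as liquid (True) if it is hotter than threshold_c.

    Assumes `thermal` is already aligned pixel-for-pixel with the RGB image.
    The 30 deg C default is an illustrative assumption, not a measured value.
    """
    return [[t > threshold_c for t in row] for row in thermal]


def liquid_fraction(mask: Mask) -> float:
    """Return the fraction of pixels labeled as liquid (0.0 for an empty mask)."""
    total = sum(len(row) for row in mask)
    if total == 0:
        return 0.0
    # True counts as 1 when summed, so this counts liquid pixels.
    return sum(v for row in mask for v in row) / total


if __name__ == "__main__":
    # Toy 2x3 "thermal image": room-temperature background plus heated water.
    thermal = [[22.0, 23.5, 41.0],
               [22.1, 45.2, 44.8]]
    mask = thermal_to_liquid_mask(thermal)
    print(mask)                   # [[False, False, True], [False, True, True]]
    print(liquid_fraction(mask))  # 0.5
```

A fixed threshold is the simplest choice here; in practice the cutoff would depend on the water and room temperatures, and the heated water cools over the course of a pour.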

Here are some preliminary results showing the output of the LSTM-CNN on the real data.

Papers:

Schenck, C., Fox, D., “Towards Learning to Perceive and Reason About Liquids,” In Proceedings of the International Symposium on Experimental Robotics (ISER), Tokyo, Japan, October 3-6, 2016. [Caffe Network Files][ArXiv]

Schenck, C., Fox, D., "Detection and Tracking of Liquids with Fully Convolutional Networks," In Proceedings of the Robotics: Science and Systems (RSS) Workshop "Are the Skeptics Right? Limits and Potentials of Deep Learning in Robotics," Ann Arbor, Michigan, USA, June 18-26, 2016. [ArXiv]