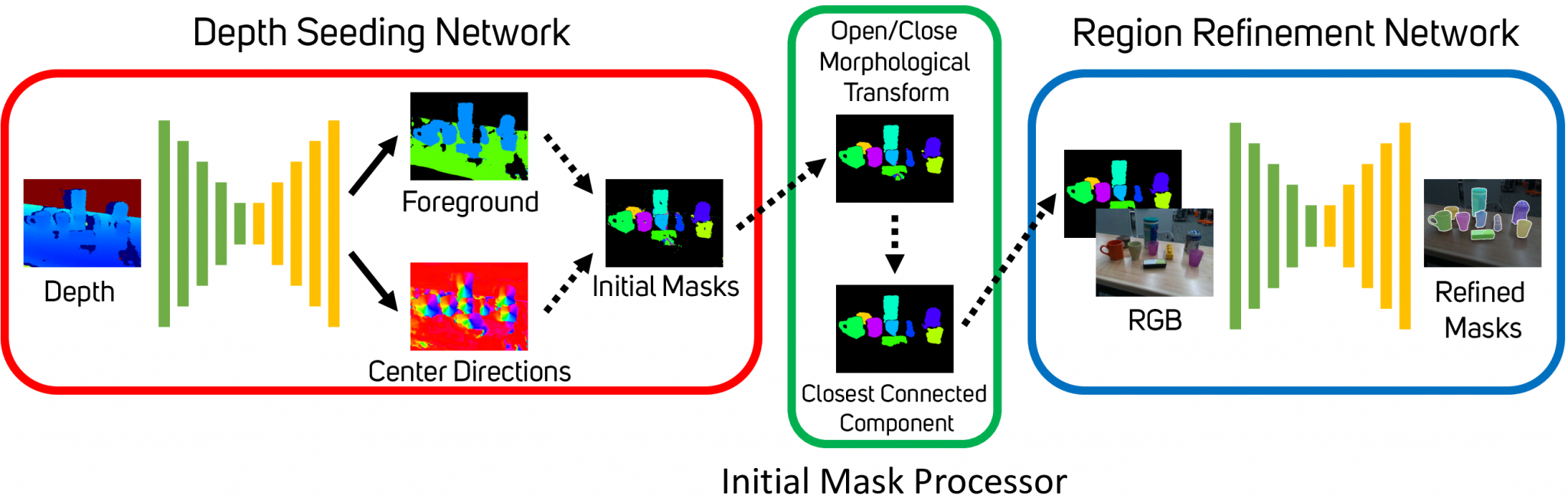

In order to function in unstructured environments, robots need the ability to recognize unseen novel objects. We take a step in this direction by tackling the problem of segmenting unseen object instances in tabletop environments. However, the type of large-scale real-world dataset required for this task does not exist for most robotic settings, which motivates the use of synthetic data. We propose a novel method that separately leverages synthetic RGB and synthetic depth for unseen object instance segmentation. Our method consists of two stages: the first stage operates only on depth to produce rough initial masks, and the second stage refines these masks with RGB. Surprisingly, our framework is able to learn from synthetic RGB-D data where the RGB is non-photorealistic. To train our method, we introduce a large-scale synthetic dataset of random objects on tabletops. We show that our method, trained on this dataset, can produce sharp and accurate masks, outperforming state-of-the-art methods on unseen object instance segmentation. We also show that our method can segment unseen objects for robot grasping.
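The two-stage data flow described above can be illustrated with a deliberately simplified sketch. This is not the authors' implementation. The actual method is trained on synthetic data, whereas here both stages are replaced by hand-written heuristics purely to show how the stages fit together: depth alone proposes rough masks, and RGB alone refines each one. The heuristics themselves are assumptions: a known table depth, flood-fill grouping, and a median-color test. All function names, parameters, and the toy scene are hypothetical.

```python
from collections import deque

# Hypothetical conventions for this sketch: images are row-major nested lists,
# and a mask is a set of (row, col) pixel coordinates.
NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def stage1_depth_masks(depth, table_depth, margin=0.01):
    """Toy stage 1 (depth only): rough instance masks.

    Pixels closer to the camera than the table depth (assumed known here) by
    more than `margin` count as foreground; each 4-connected foreground
    component becomes one rough instance mask.
    """
    h, w = len(depth), len(depth[0])
    seen, masks = set(), []
    for r in range(h):
        for c in range(w):
            if (r, c) in seen or depth[r][c] >= table_depth - margin:
                continue
            # Breadth-first flood fill over foreground pixels.
            comp, queue = set(), deque([(r, c)])
            seen.add((r, c))
            while queue:
                y, x = queue.popleft()
                comp.add((y, x))
                for dy, dx in NEIGHBORS:
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w and (ny, nx) not in seen
                            and depth[ny][nx] < table_depth - margin):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            masks.append(comp)
    return masks


def stage2_rgb_refine(rgb, mask, max_dist=60):
    """Toy stage 2 (RGB only): refine one rough mask.

    Candidates are the mask plus its 4-neighbors; a candidate is kept if its
    color is within `max_dist` (L1 distance) of the mask's per-channel median
    color, so the mask can both grow and shrink along color boundaries.
    """
    h, w = len(rgb), len(rgb[0])
    colors = [rgb[y][x] for y, x in mask]
    median = [sorted(ch)[len(ch) // 2] for ch in zip(*colors)]
    candidates = set(mask)
    for y, x in mask:
        for dy, dx in NEIGHBORS:
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                candidates.add((ny, nx))
    return {
        (y, x) for y, x in candidates
        if sum(abs(a - b) for a, b in zip(rgb[y][x], median)) <= max_dist
    }


def segment(depth, rgb, table_depth):
    """Run the toy pipeline: depth proposes masks, RGB refines each one."""
    return [stage2_rgb_refine(rgb, m) for m in stage1_depth_masks(depth, table_depth)]


# Hypothetical 4x5 scene: a red object on a gray table, where the depth
# image misses the object's right-hand column.
TABLE, RED = (128, 128, 128), (200, 30, 30)
depth = [[1.0] * 5 for _ in range(4)]
for y, x in [(1, 1), (1, 2), (2, 1), (2, 2)]:
    depth[y][x] = 0.8
rgb = [[TABLE] * 5 for _ in range(4)]
for y in (1, 2):
    for x in (1, 2, 3):
        rgb[y][x] = RED

print(sorted(segment(depth, rgb, table_depth=1.0)[0]))
# → [(1, 1), (1, 2), (1, 3), (2, 1), (2, 2), (2, 3)]
```

In this toy scene, the depth stage finds a four-pixel rough mask. The RGB stage then extends it to the object's full six-pixel extent, because the missing column matches the object's color. This mirrors, in a very crude way, the division of labor the abstract describes.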

Publications

The Best of Both Modes: Separately Leveraging RGB and Depth for Unseen Object Instance Segmentation.

Christopher Xie, Yu Xiang, Arsalan Mousavian, and Dieter Fox.

Conference on Robot Learning – CoRL, 2019.

Unseen Object Instance Segmentation for Robotic Environments.

Christopher Xie, Yu Xiang, Arsalan Mousavian, and Dieter Fox.

IEEE Transactions on Robotics – T-RO, 2021.

Code and Models

Our code and models are public and can be found here.


Contact: chrisxie at cs dot washington dot edu

Last update: 09/25/2019